VOO has completed Memento, its Business Intelligence and Big Data transformation programme, which involved a migration to the cloud. Find out how Micropole supported the Belgian operator during the various phases of the project.

VOO's challenges and issues

As part of a global transformation, Micropole supported the Belgian operator VOO in the complete overhaul of its Business Intelligence services and its Big Data and AI environment, and in its migration to the cloud. This migration was essential to meet strategic and urgent operational needs, including:

- Significantly increasing customer knowledge to accelerate acquisition and improve retention

- Supporting digital transformation by providing a single view of the customer and their behaviour

- Responding to new compliance challenges (GDPR)

- Drastically reducing the total cost of ownership (TCO) of the data environments (4 different BI environments + 3 Hadoop clusters before the transformation)

- Implementing enterprise-wide data governance and addressing the additional cost of shadow BI: more than 25 FTEs in business teams were accounted for as doing data crunching.

The solutions and results generated by Micropole

Micropole conducted a short study to analyse all aspects of the transformation, addressing both organisational challenges (roles and responsibilities, teams and skills, processes, governance) and technical challenges (holistic architectural scenarios, ranging from hybrid cloud to complete PaaS cloud solutions).

Based on the results of this study, Micropole deployed an enterprise-wide cloud-based data platform that combines traditional business intelligence processes with high-level analytical capabilities.

Micropole helped redefine the data organization and associated processes, and introduced data governance throughout the enterprise.

TCO has been reduced by 70% while agility, processing and ideation capabilities have improved significantly.

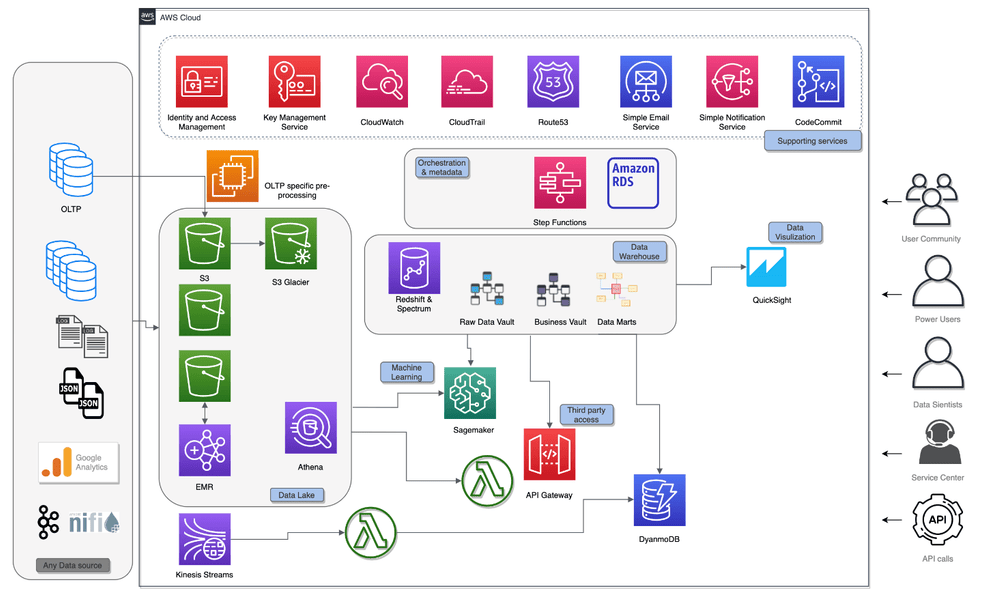

An architecture based on AWS key data services

Data lake

Amazon S3 is used for the core ingest layer and provides long-term persistence.

Some data files are prepared on Amazon EMR. EMR clusters are created on the fly several times a day and process only the new data arriving in S3. Once the data has been processed and stored in Apache Parquet, a columnar format optimised for analysis, the cluster is destroyed. Encryption and lifecycle management are enabled on most S3 buckets to meet security and cost efficiency requirements. Over 600 TB of data is currently stored in the Data Lake. Amazon Athena is used to create and maintain a data catalogue and explore the raw data in the Data Lake.
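The transient-cluster pattern described above can be sketched as follows. This is a minimal illustration, not VOO's actual configuration: the bucket name, job script path, instance types, release label and role names are all assumptions. The dictionary follows the shape of the EMR `RunJobFlow` request, and the key setting is `KeepJobFlowAliveWhenNoSteps`, which makes the cluster terminate once its steps are done.

```python
from typing import Dict, Iterable, List, Set


def select_new_keys(all_keys: Iterable[str], processed: Set[str]) -> List[str]:
    """Return only the S3 keys that have not been processed yet."""
    return sorted(key for key in all_keys if key not in processed)


def build_transient_emr_request(new_keys: List[str], bucket: str) -> Dict:
    """Build a request for a cluster that terminates itself after its steps.

    All names below (script path, instance types, roles) are hypothetical.
    """
    if not new_keys:
        raise ValueError("no new data to process")
    # Hypothetical Spark job that converts the listed files to Parquet.
    step_args = ["spark-submit", "s3://{}/jobs/to_parquet.py".format(bucket)]
    step_args += ["s3://{}/{}".format(bucket, key) for key in new_keys]
    return {
        "Name": "transient-parquet-conversion",
        "ReleaseLabel": "emr-6.10.0",
        "Applications": [{"Name": "Spark"}],
        "Instances": {
            "MasterInstanceType": "m5.xlarge",
            "SlaveInstanceType": "m5.xlarge",
            "InstanceCount": 3,
            # The cluster is destroyed as soon as the last step finishes.
            "KeepJobFlowAliveWhenNoSteps": False,
        },
        "Steps": [
            {
                "Name": "convert-new-files-to-parquet",
                "ActionOnFailure": "TERMINATE_CLUSTER",
                "HadoopJarStep": {"Jar": "command-runner.jar", "Args": step_args},
            }
        ],
        "JobFlowRole": "EMR_EC2_DefaultRole",
        "ServiceRole": "EMR_DefaultRole",
    }
```

In a real deployment the resulting dictionary would typically be passed to the EMR client of the AWS SDK, for example `boto3.client("emr").run_job_flow(**request)`.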

Real-time ingestion

Amazon Kinesis Data Streams captures real-time data, which is filtered and enriched by a Lambda function with data from the data warehouse, before being stored in an Amazon DynamoDB database. Real-time data is also stored in dedicated S3 buckets for persistence.
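As an illustration of the filter-and-enrich step, here is a minimal sketch of a Lambda handler for Kinesis events. The field names (`customer_id`, `segment`) and the in-memory `CUSTOMER_SEGMENTS` lookup are hypothetical stand-ins for the reference data taken from the data warehouse; the only part drawn from AWS itself is the event shape, in which each record carries a base64-encoded payload under `kinesis.data`.

```python
import base64
import json
from typing import Any, Dict, List

# Hypothetical reference data standing in for a data warehouse lookup.
CUSTOMER_SEGMENTS = {"c-001": "residential", "c-002": "business"}


def decode_record(record: Dict[str, Any]) -> Dict[str, Any]:
    """Decode the base64 JSON payload of one Kinesis record."""
    payload = base64.b64decode(record["kinesis"]["data"])
    return json.loads(payload)


def enrich_handler(event: Dict[str, Any], context: Any = None) -> List[Dict[str, Any]]:
    """Filter out unknown customers and enrich the rest with their segment."""
    items = []
    for record in event["Records"]:
        message = decode_record(record)
        customer_id = message.get("customer_id")
        if customer_id not in CUSTOMER_SEGMENTS:
            continue  # filter: drop events we cannot enrich
        message["segment"] = CUSTOMER_SEGMENTS[customer_id]
        items.append(message)
    # A real function would write these items to DynamoDB instead of returning them.
    return items
```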

Data Warehouse

The Data Warehouse runs on Amazon Redshift, using the new RA3 nodes and following the Data Vault 2.0 modelling methodology. Data Vault objects are highly standardised and follow strict modelling rules, which allows a high degree of automation of the data warehouse. The data model is generated from the metadata stored in an Amazon RDS Aurora database.

The automation engine itself is built on Apache Airflow, deployed on EC2 instances.

The project implementation began in June 2017; the production Redshift cluster, initially sized at 6 DC2 nodes, has evolved seamlessly over time and has consistently met the growing data needs of the project and all business expectations.
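To give an idea of what metadata-driven generation can look like, the sketch below produces the DDL for a Data Vault hub from a small metadata record. It is an assumption-laden illustration rather than the engine Micropole built: the naming conventions (`hub_` prefix, `_hk` suffix), the column types and the choice of MD5 for hash keys are hypothetical, although hashed business keys are a common Data Vault 2.0 practice.

```python
import hashlib


def hash_key(business_key: str) -> str:
    """Derive a hub hash key from a normalised business key (MD5 assumed)."""
    normalised = business_key.strip().upper()
    return hashlib.md5(normalised.encode("utf-8")).hexdigest()


def hub_ddl(entity: str, business_key: str) -> str:
    """Generate the CREATE TABLE statement for one hub from its metadata."""
    return (
        "CREATE TABLE hub_{e} (\n"
        "    {e}_hk CHAR(32) NOT NULL,\n"
        "    {bk} VARCHAR(256) NOT NULL,\n"
        "    load_date TIMESTAMP NOT NULL,\n"
        "    record_source VARCHAR(64) NOT NULL,\n"
        "    PRIMARY KEY ({e}_hk)\n"
        ");"
    ).format(e=entity, bk=business_key)


# Hypothetical metadata rows, as they might be read from the metadata database.
HUB_METADATA = [
    {"entity": "customer", "business_key": "customer_number"},
    {"entity": "contract", "business_key": "contract_number"},
]

if __name__ == "__main__":
    for row in HUB_METADATA:
        print(hub_ddl(row["entity"], row["business_key"]))
```

Because every hub has the same structure, adding a new entity only requires a new metadata row, which is what makes this style of modelling easy to automate.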

DynamoDB

Amazon DynamoDB is used for specific cases where web applications require sub-second response times. DynamoDB's read/write capacity settings can deliver very high, but more expensive, read capacity; this is used only during service hours, when low latency and instantaneous response times are required. These mechanisms, based on the flexibility of AWS services, help to optimise the monthly AWS bill.
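The idea of paying for high read capacity only during service hours can be sketched as a small scheduling function. The service window (08:00 to 18:00) and the capacity figures are purely illustrative assumptions; the returned dictionary uses the `ReadCapacityUnits` and `WriteCapacityUnits` keys of DynamoDB's `ProvisionedThroughput` setting.

```python
from datetime import time
from typing import Dict

# Assumed service window; the real hours are not stated in the article.
SERVICE_START = time(8, 0)
SERVICE_END = time(18, 0)


def throughput_for(
    now: time,
    peak_reads: int = 500,
    off_peak_reads: int = 5,
    writes: int = 5,
) -> Dict[str, int]:
    """Return the provisioned throughput to apply at a given time of day."""
    in_service = SERVICE_START <= now < SERVICE_END
    return {
        # High read capacity is paid for only while applications need it.
        "ReadCapacityUnits": peak_reads if in_service else off_peak_reads,
        "WriteCapacityUnits": writes,
    }
```

A scheduled job could apply the result with the AWS SDK, for instance through the `ProvisionedThroughput` argument of the DynamoDB `update_table` call.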

Machine Learning

A series of predictive models was implemented, ranging from a classic attrition prediction model to more advanced use cases. For example, a model was cleverly designed to identify customers likely to be affected by a network failure. Amazon SageMaker was used to create, train and deploy the models at scale, leveraging the data available in the Data Lake (Amazon S3) and Data Warehouse (Amazon Redshift).

External access to APIs

For regulatory purposes, external actors need to access specific data sets in a secure, traceable and reliable way. Amazon API Gateway is used to deploy secure REST APIs, in addition to serverless data microservices implemented with Lambda functions.
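A serverless data microservice of this kind might look like the sketch below, written for API Gateway's Lambda proxy integration. The dataset name, its contents and the `dataset` path parameter are hypothetical; what comes from the integration itself is the event shape (`pathParameters`, `requestContext`) and the response shape (`statusCode`, `headers`, `body`). Echoing the request identifier in every response is one simple way to support traceability.

```python
import json
from typing import Any, Dict

# Hypothetical data sets exposed to external parties.
DATASETS = {"coverage": [{"zone": "A", "status": "ok"}]}


def _response(status: int, body: Dict[str, Any]) -> Dict[str, Any]:
    """Format a response in the shape expected by the Lambda proxy integration."""
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(body),
    }


def dataset_handler(event: Dict[str, Any], context: Any = None) -> Dict[str, Any]:
    """Serve one named data set, echoing the request id for traceability."""
    params = event.get("pathParameters") or {}
    name = params.get("dataset")
    request_id = (event.get("requestContext") or {}).get("requestId", "unknown")
    if name not in DATASETS:
        return _response(404, {"error": "unknown dataset", "requestId": request_id})
    return _response(
        200, {"dataset": name, "rows": DATASETS[name], "requestId": request_id}
    )
```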

And much more!

The data platform that Micropole has built for VOO offers many other possibilities. The very rich range of services available on the AWS environment means that new use cases can be handled quickly and efficiently every day.

To find out more about this disruptive project successfully carried out by Micropole and to discover all the phases of the project, read this article by Alain de Fooz published in Solutions Magazines.