By Stéphanie De la Asuncion, BI Project Director, Mickaël Mackow, BI Project Manager and Vivien Liochon, MSBI Technical Expert - Micropole Centre-Est.

In early 2018, Microsoft released a data integration service hosted entirely in the cloud - Azure Data Factory. Allowing to orchestrate services that collect raw data, this solution also transforms it into ready-to-use information. In this article, we will take a look at this service by describing its interests, its limitations and its use in a concrete framework: which will include the steps for creating a feed under Azure Data Factory.

For several months or even years, Microsoft has been involved in a Cloud Computing approach to offer more and more Azure services and push companies to follow suit. The benefit: to have a single global management platform bringing together all the essential services for the proper functioning of the company's information system.

Azure Data Factory is a service that will allow companies to no longer have to manage physical or virtual machines to process their data. The main interest of this new feature is to avoid the costs of maintenance, software installations, etc.. It is still possible to process, transform, store, delete and archive data with SSIS and Azure storage spaces.

AZURE DATA FACTORY: APPLYING IT IN THE ENTERPRISE

- Context of the project at one of our customers

Our experts set up a BI tool with nightly feeds, made with Microsoft technologies hosted on Azure virtual machines. Once this tool was in place, the evolution requests led to new daily flows that had to be executed during the day.

In order to limit the cost of using the Azure servers, the jobs were scheduled to stop the servers at the end of the processing. The new flows generated also posed a problem for us: managing the startup and shutdown of the servers.

This constraint led us to explore Azure Data Factory. As a service, there is no server management to be expected and the new simple data flows were therefore developed with it.

The objectives were to:

- Validate the use of this tool in service mode;

- In the long term, separate from the virtual machine infrastructure;

- Store data from various sources in a consolidated Azure space;

- Launch SSIS data processing flows without having to manage a server;

- Schedule the entire supply flow

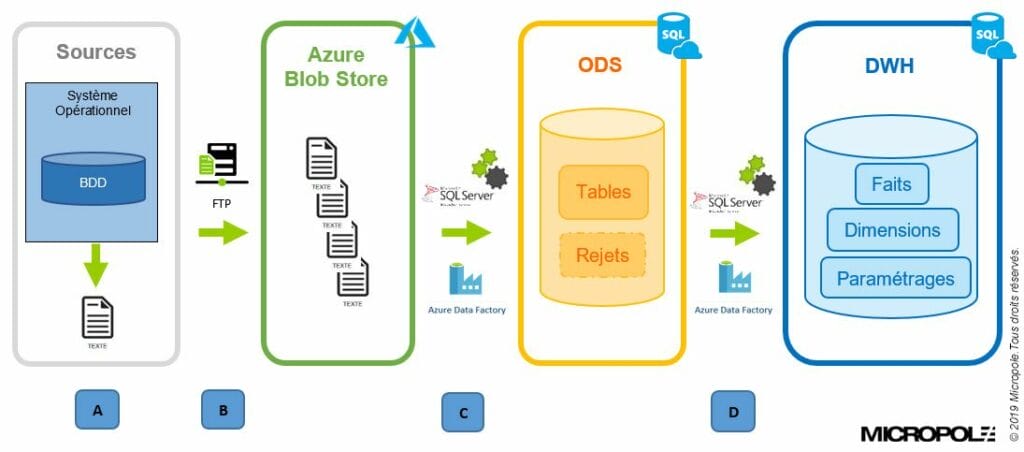

To present the architecture of the project, here is the diagram that includes the Azure environment in the implementation of the global data integration.

A. Extract data into files

B. FTP transfer of files to the Azure blob store

C. Integration of files into the ODS (Operational Data Store) via SSIS packages launched from Azure Data Factory

D. Integration of ODS data into the DWH (Datawarehouse) via SSIS packages launched from Azure Data Factory

- How the Azure Data Factory works

Comparative diagram between the operation of a flow via a server and an ADF flow

Note that the only cost for Azure Data Factory is the SSIS Integration Runtime. This is a node that allows you to launch SSIS feeds to which you attach a SQL Server license. It is also possible to configure performance based on usage and data.

REVIEW AND OUTLOOK

By accompanying our client on its BI project, our experts were able to highlight the tops and flops of this recent solution such as:

The advantages:

- A delocalized service;

- An easy handling for simple flows;

- Limited costs;

- A development or a license management that does not require the installation of a tool;

- A scheduler: possibility to plan the flows in a periodic way or an event

Disadvantages:

- A tedious management of environments (production/receipt/development/...);

- Requires particular attention at the start of the service: it is necessary to think of an automatic stop otherwise the costs increase very quickly;

- All the functionalities are not yet available and it is thus difficult to make complex flows.

However, Azure Data Factory is a solution that makes it easy to create, manage and monitor the feed processes in a single service.

No doubt that in the coming months (new versions of the tool in V2), the service will evolve to integrate native components that will not require specific development: the deletion of files in an Azure vault, the start/stop of the SSIS Integration Runtime and many others ... To be continued.